Using the Crowd to Automate QA

Written on October 3, 2016 – 6:11 am | by Emily Taylor

I’ve been thinking about QA recently. It’s the subject of this week’s Translation Management Systems class, and we’ve been assigned to watch a recording of a webinar on MQM (Multidimensional Quality Metrics). Furthermore, I attended the annual Silicon Valley localization unconference last Friday, and I thoroughly enjoyed the session about automated quality measurements. It had a lively (perhaps even heated) discussion.

MQM is a clever system that tailors a catalog of 120+ QA issue types to the user’s particular needs. The user gets a QA checklist without having to evaluate all 120+ items: each issue is relevant to somebody out there, but no single user needs every one of them. This is an efficient system, and I wish I’d known about it last semester when I was looking for a QA model to evaluate our Statistical Machine Translation engine. (We used LISA, which worked well enough, although some items weren’t particularly relevant.) But MQM still seems rather… manual. And since attending the unconference, I’ve been wondering: How can we make QA more automated?

Consider eBay. They put out an extraordinary amount of machine translation, far too much to perform QA beyond sampling. But the problem with sampling is obvious: a randomly chosen sample could contain too few errors, or too many, giving an incorrect picture of the translation as a whole. Given that there is too much translation output to manually check more than a small sample, what other options would a company like eBay have?
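Before turning to alternatives, it may help to see how much a small sample can mislead. The sketch below is only an illustration under made-up numbers: it assumes a hypothetical pool of 100,000 translated segments with a true error rate of 10%, and repeatedly draws QA samples of 100 segments. None of these figures come from eBay. For a sample of size n and true error rate p, the standard error of the observed rate is roughly sqrt(p(1 - p) / n), so at these sizes individual samples can land noticeably above or below the truth.

```python
import random

def sample_error_rates(population, sample_size, trials, seed=0):
    """Draw `trials` random QA samples and return the error rate seen in each.

    `population` is a list of 0/1 flags (1 = segment contains an error).
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [
        sum(rng.sample(population, sample_size)) / sample_size
        for _ in range(trials)
    ]

# Hypothetical pool: 100,000 segments, 10% of which contain an error.
population = [1] * 10_000 + [0] * 90_000

rates = sample_error_rates(population, sample_size=100, trials=1000)
print("lowest observed error rate: ", min(rates))
print("highest observed error rate:", max(rates))
print("average across all samples: ", sum(rates) / len(rates))
```

The average across many samples sits near the true rate, but a QA team only ever sees one sample, and that one could be at either extreme.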

One solution would be to tap into its users as a form of QA crowdsourcing. eBay already does this, but in an extremely limited way. Users can give an overall rating to the machine-translated text by selecting a rating at the bottom of the page. It’s unclear how useful an overall rating would be in evaluating the quality of the machine translation on that page as a whole, and many users could mistakenly use it to rate how much they like the contents of the auction.

What I’m imagining is offering users of machine-translated auctions a way to provide feedback, much like the one Google Translate gives its users.

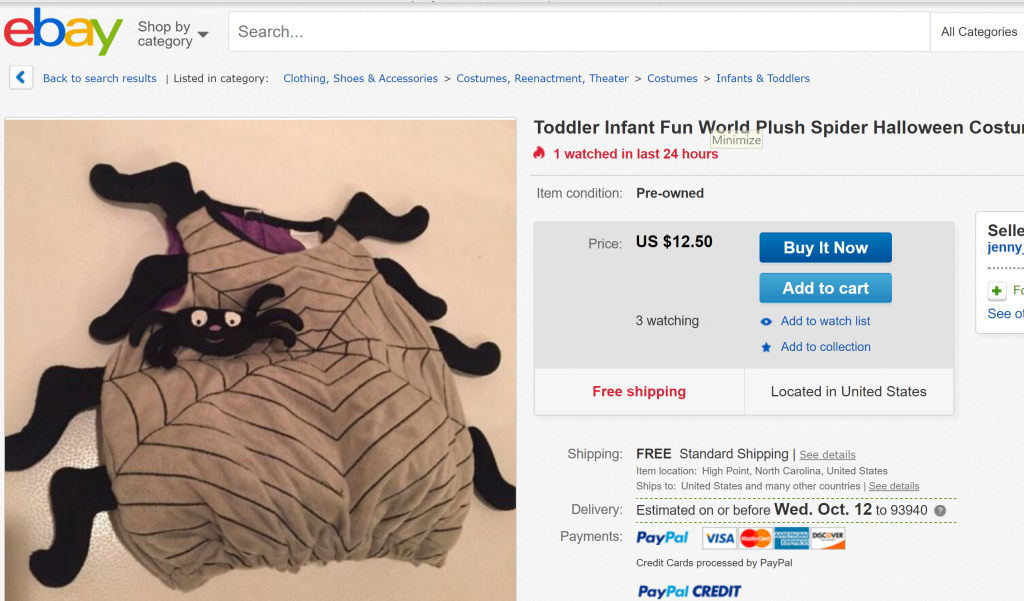

For example, take this eBay auction:

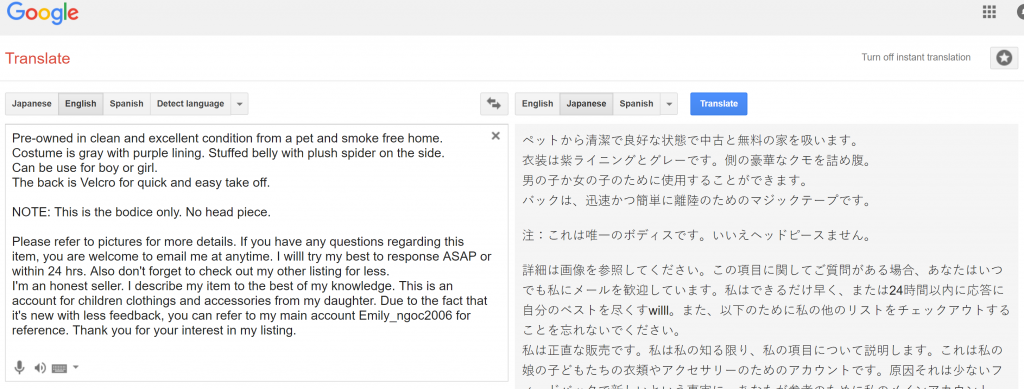

I’ve copied and pasted the text into Google Translate and set it to output Japanese:

Of course, the eBay MT engine would be much better at processing this kind of user-generated content. Notice that Google Translate gets caught up on the typo “willl,” something that eBay’s engine would most likely translate without issue.

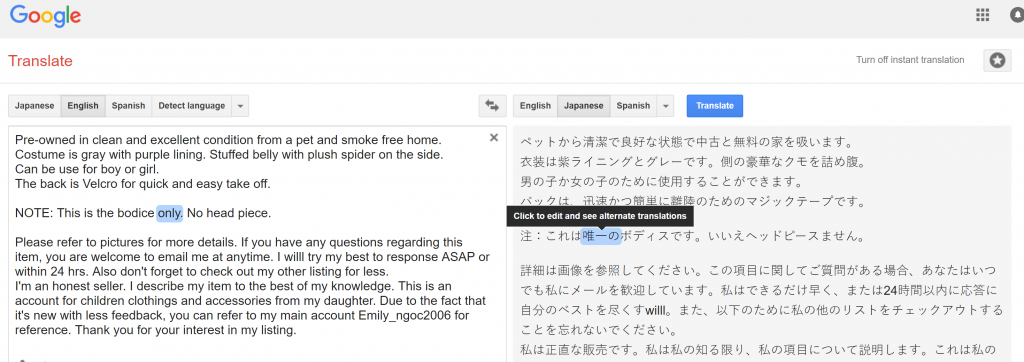

But here is where Google Translate excels:

I’ve highlighted the Japanese output for the word “only.” It is mistranslated here. Instead of saying that this auction is for the bodice only, the Japanese version says something along the lines of this auction being for the only bodice in existence. Since this is clearly a mass-produced costume, even a user who understands none of the source text would know that to be a mistranslation.

Thankfully, I can click on the oddly translated word and see additional options (and even an option to improve the translation myself!).

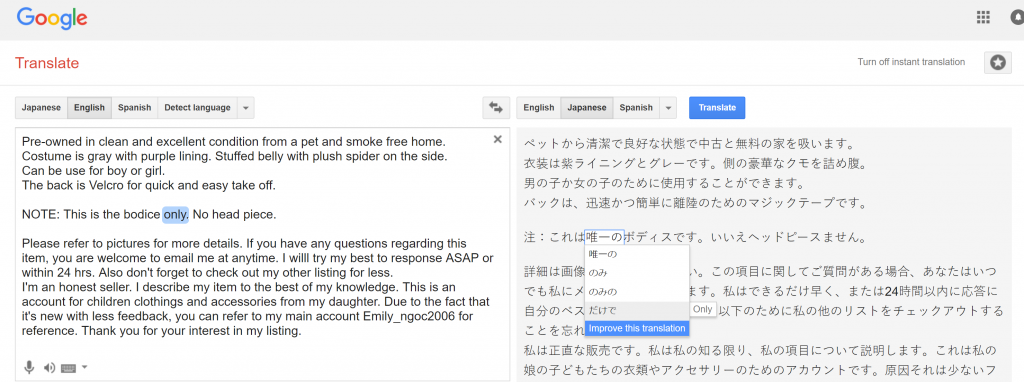

The other options listed here are closer to the correct translation.

Now, imagine if eBay had a similar user interface on its machine-translated auction pages. They would look like normal auction pages, but the words could be selected just like in Google Translate. The user would get a more accurate translation, since they could select words that don’t seem to fit and potentially see more fitting ones. The same feedback would also give eBay a form of QA, showing how well its MT engine is doing, and eBay could even feed the improvements back into that engine.

Of course, not all of the feedback would be useful. There would be plenty of crackpots who play with the feedback system by selecting random (or purposely incorrect) words. But eBay could avoid this problem by setting a high threshold, for example by requiring many different users to pick the same alternative, before any of the feedback gets fed into the MT engine. Its large user base would allow it that luxury.
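The threshold idea can be sketched in a few lines. This is a minimal illustration and not a description of any real eBay or Google system: the function name `accepted_corrections`, the `(segment_id, original_word, suggested_word)` tuple format, and the `min_votes` and `min_share` parameters are all hypothetical. A suggestion only passes if enough users chose it and if it clearly dominates the other suggestions for the same word, which is what filters out random or deliberately wrong clicks.

```python
from collections import Counter, defaultdict

def accepted_corrections(feedback, min_votes=50, min_share=0.6):
    """Return the corrections that enough users agree on.

    `feedback` is an iterable of (segment_id, original_word, suggested_word)
    tuples, one per user click (a hypothetical format). A suggestion is
    accepted only if it received at least `min_votes` votes and at least
    `min_share` of all votes cast for that word.
    """
    votes = defaultdict(Counter)
    for segment_id, original, suggestion in feedback:
        votes[(segment_id, original)][suggestion] += 1

    accepted = {}
    for key, counter in votes.items():
        suggestion, count = counter.most_common(1)[0]
        total = sum(counter.values())
        if count >= min_votes and count / total >= min_share:
            accepted[key] = suggestion
    return accepted

# Toy data with a deliberately tiny threshold, just to show the behavior.
feedback = (
    [("auction-1", "only", "nomi")] * 3       # three users agree
    + [("auction-1", "only", "neko")]         # one random click
    + [("auction-2", "new", "furui")] * 2     # too few votes to count
)
print(accepted_corrections(feedback, min_votes=3, min_share=0.6))
```

With a real user base the vote threshold would be far higher than in this toy run, and only the accepted corrections would be candidates for feeding back into the MT engine.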

Machine translation is the perfect solution (indeed, the only solution in most cases) for user-generated content. However, given the unrestricted text we see in these cases, the quality of output still tends to be fairly low, and the quantity of output is far too large for QA to check more than a very small sample. But vast improvements could be made if companies like eBay or Facebook were to automate the collection of feedback from users, both to constantly evaluate the quality of their machine translation output and to improve their machine translation engines.
