Introduction

To get a deeper understanding of how machine translation (MT) engines work and can be trained, I worked with a team to custom-train an MT engine of our own. We intentionally chose a genre that we expected machine translation to handle poorly: video gaming. Game localization frequently needs a high degree of creativity to be done well, so we were curious about how well a machine translation engine could measure up to the human touch. We used both Systran and Microsoft Custom Translator to train neural machine translation (NMT) engines in iterative rounds. The details of our datasets and training process can be found in the project proposal.

Goals and Quality Evaluation

The overall purpose of the project was to gather data for an informed estimate of the resources required to train an NMT engine to translate games in the Legend of Zelda franchise from Japanese to English, and to explore the feasibility of using post-edited machine translation (PEMT) for future Zelda titles. PEMT viability would be measured by three criteria:

- Efficiency: PEMT 30% faster than human translation

- Cost: PEMT 30% cheaper than human translation

- Quality: PEMT quality deemed acceptable through player evaluation of factors such as story and consistency in character speech style.

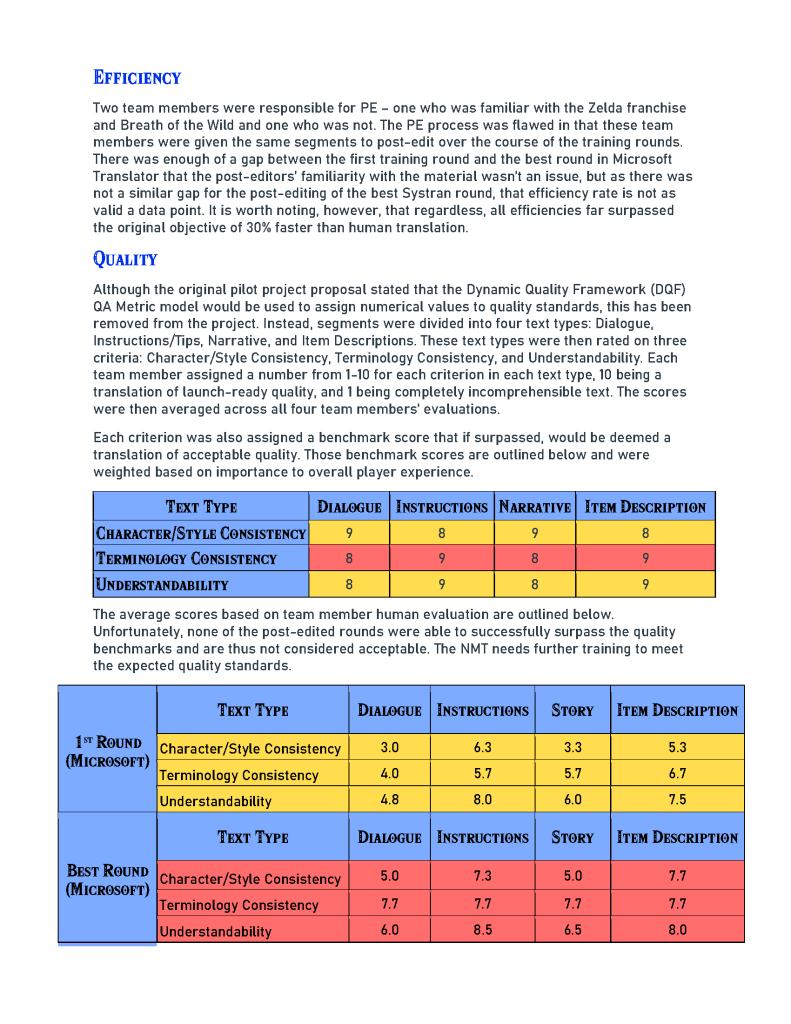

Instead of using an established framework to test quality, we created our own human evaluation method specific to this project. On our team, two people had experience playing the Zelda games and two did not. We each scored translations against a set of criteria determined by the reviewer’s experience and background knowledge of the games. These scores were then averaged and measured against our established quality benchmarks.
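The averaging step described above can be sketched in a few lines of Python. This is only an illustration of the idea, not our actual scoring sheet: the criterion names, the 1 to 5 scale, the benchmark values, and the scores themselves are all hypothetical placeholders.

```python
from statistics import mean

def evaluate(scores_by_criterion, benchmarks):
    """Average the reviewers' scores per criterion and compare to a benchmark.

    Returns {criterion: (average_score, met_benchmark)}.
    """
    results = {}
    for criterion, scores in scores_by_criterion.items():
        average = mean(scores)
        results[criterion] = (average, average >= benchmarks[criterion])
    return results

# Hypothetical example values (NOT the project's real scores or benchmarks).
# A criterion may have fewer scores if only some reviewers were able to rate it.
example_scores = {
    "story": [2, 3, 3, 2],         # all four reviewers
    "character_voice": [2, 2],     # only reviewers familiar with the games
}
example_benchmarks = {"story": 4.0, "character_voice": 4.0}

example_results = evaluate(example_scores, example_benchmarks)
for criterion, (average, passed) in example_results.items():
    print(f"{criterion}: average {average:.2f}, benchmark met: {passed}")
```

Keeping the scores grouped by criterion makes it easy to see which aspect of the translation falls short, rather than collapsing everything into a single number.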

Results

Costs

We based our cost calculations for human translation on the assumption that a human translator would translate 300 words per hour and be paid $0.12 per word. This rate was based on the market average for Japanese translation at the time of the project. Unsurprisingly, the savings in both time and money far exceeded our proposed objectives. However, this does not mean that the project was a success.
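As a rough sketch of the arithmetic, the human baseline and the 30% targets could be computed as follows. The 300 words per hour and $0.12 per word figures come from our assumptions above; the word count and the PEMT hours and cost in the example are hypothetical placeholders, not our measured results. The sketch also assumes that "30% faster" means 30% less time spent.

```python
HUMAN_WORDS_PER_HOUR = 300    # assumed human translation speed
HUMAN_RATE_PER_WORD = 0.12    # assumed rate in USD per word
TARGET_IMPROVEMENT = 0.30     # 30% target for both time and cost

def human_baseline(word_count):
    """Return (hours, cost_in_usd) for a human translating word_count words."""
    hours = word_count / HUMAN_WORDS_PER_HOUR
    cost = word_count * HUMAN_RATE_PER_WORD
    return hours, cost

def relative_savings(baseline, pemt):
    """Fraction saved relative to the human baseline (0.4 means 40% saved)."""
    return (baseline - pemt) / baseline

def meets_target(baseline, pemt, target=TARGET_IMPROVEMENT):
    """True if PEMT saves at least the target fraction over the baseline."""
    return relative_savings(baseline, pemt) >= target

# Hypothetical example: a 3,000-word batch, with made-up PEMT figures.
baseline_hours, baseline_cost = human_baseline(3000)
pemt_hours, pemt_cost = 6.0, 200.0   # placeholders, not measured values

print(f"Human baseline: {baseline_hours:.1f} h, ${baseline_cost:.2f}")
print(f"Time target met: {meets_target(baseline_hours, pemt_hours)}")
print(f"Cost target met: {meets_target(baseline_cost, pemt_cost)}")
```

Note that a real PEMT cost would need to include engine training, hosting, and post-editor fees, which is why those inputs are left as parameters here.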

Quality

We evaluated three outputs: the first training round completed in Microsoft Custom Translator, the best round (highest BLEU score) from Microsoft, and the best round from Systran. In none of them did any of the criteria meet our quality benchmarks. This result was also unsurprising, as we had assumed that the level of creativity needed for quality narrative and character styling could not be replicated by an NMT engine.

Updated Proposal

After the training rounds were completed, we went back to our original pilot project proposal and updated it to reflect the results of the training, an accurate account of the costs necessary for the project, recommended additional training and translation settings, and the details of each training round and quality evaluation.