By: Jasmine Wang

Date: May 6, 2021

How Can Software Be Racist?

You may or may not have noticed that AI has become part of our daily lives and has been adopted in all walks of life. But AI is not perfect, and the technology is not immune to racial bias. Some AI software ends up behaving in racist ways, whether through the data it learns from or the way it is designed, and so it worsens the problems it was meant to improve.

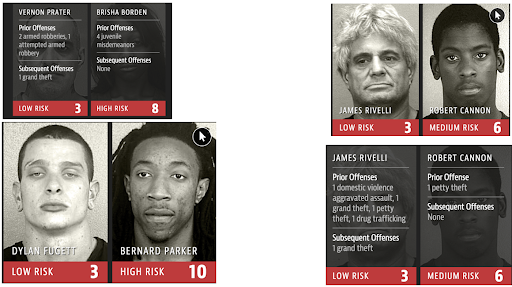

One way for bias to occur is during the data collection process. Police forces have been replacing officer intervention with predictive policing tools to “control” crime more effectively. The tools predict which areas and which groups of people are more likely to commit crimes, or to reoffend after being released from jail, based on the correlation between demographics and crime rates. That leads to more police harassment in black neighborhoods, because the tools flag them as more “dangerous”, and to unfair sentencing of black people, because they are presumed to be repeat offenders.

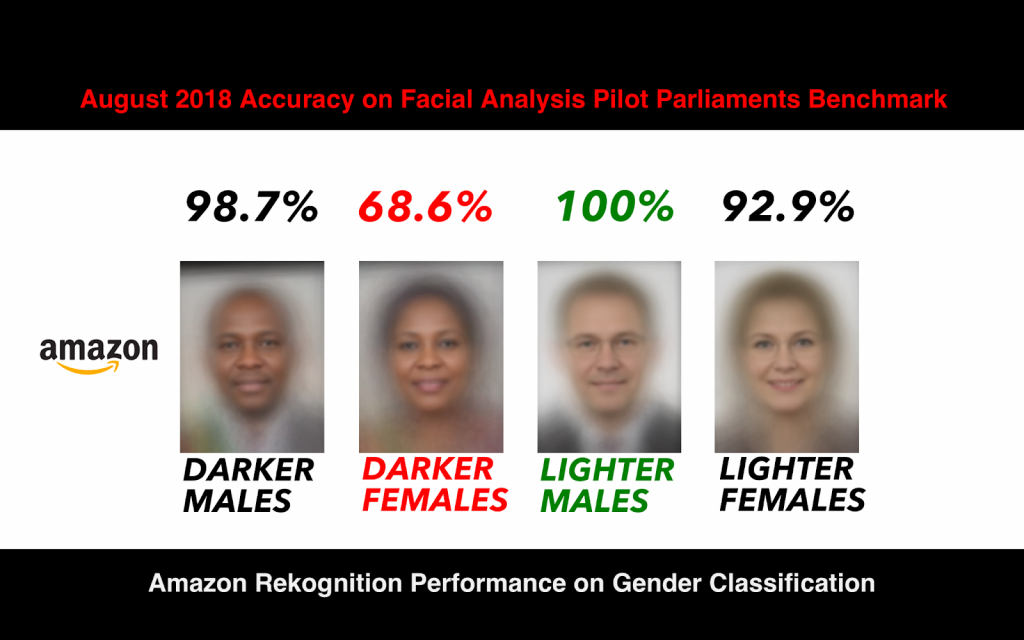

Another way for bias to creep in is when engineers design and code the software. Tech company workforces are not known for being racially diverse. Black people have reported that they have a hard time getting facial recognition software to recognize their faces, while it seems to recognize Asian and white people more easily. One explanation is that most of the software developers are either white or Asian, and they build the program around faces like their own, often without testing it on people of other races.
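One way to catch this kind of gap before release is to measure how often the software succeeds for each group of testers separately, instead of looking at a single overall score. The following is only a minimal sketch in Python: the function name `accuracy_by_group`, the group labels, and the sample results are all invented for illustration and do not come from any real facial recognition system.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """Return the share of successful recognitions for each group.

    `results` is a list of (group, was_recognized) pairs, where
    `was_recognized` is True when the software identified the face.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, was_recognized in results:
        totals[group] += 1
        if was_recognized:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


# Hypothetical test results, invented for illustration only.
sample_results = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", True),
]

rates = accuracy_by_group(sample_results)
# The gap between the best-served and worst-served group.
gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"largest accuracy gap between groups: {gap:.2f}")
```

A large gap is a signal that the software was tuned on some faces more than others. A check like this only helps if the test set itself includes people from every group the software is meant to serve.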

How do we combat racist AI?

The good news is that the world is generally optimistic about technology, especially advances in AI. To make the most of the convenience AI can bring to our lives, we can take some steps to make our software less biased:

1. Feed the machine diverse data. Data is the core of machine learning, so in order to improve the machine, we need to start by improving the data. While sorting the training data, we should engage people of different races and design different questions for different demographic groups.

2. Add social science coursework to the training of software engineers. Racial equity awareness is as important as coding skills when it comes to creating software. The software should be designed, from the start, to serve people of all races.

3. Increase diversity in the workplace. If the engineering team is composed of people from different racial backgrounds, they will come up with features that make sense in their own cultures and work for people of their races. That way the software will be friendly to all users.
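For the first step, a simple starting point is to count how much of the training data comes from each group and flag the groups that fall below some minimum share. The sketch below is an assumption-laden illustration, not a standard method: the function names, the 20% threshold, and the sample labels are all hypothetical.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List groups whose share falls below `min_share` (an assumed cutoff)."""
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical group labels for 100 training examples.
training_labels = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5

shares = group_shares(training_labels)
flagged = underrepresented(training_labels, min_share=0.2)
print(shares)
print("needs more data:", flagged)
```

Note that this only flags groups that appear at least once in the data. A group that is missing entirely will not show up, so the list of groups the software should serve still has to come from people, not from the dataset.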

References

https://towardsdatascience.com/racial-bias-in-software-772d6e949269

https://www.parkersoftware.com/blog/racism-in-technology-how-our-tools-acquire-bias/
