“High-quality translation is in greater demand today than ever before. Despite considerable progress in machine translation (MT), which has enabled many new applications for automatic translation, the quality barriers for outbound translations (i.e., translations to be published or distributed outside of an organization) have not yet been overcome. As a result, the volume of translation today fall far short of what is needed for optimal business operations and legal requirements. European industry, administration, and society all urgently need progress in translation technologies to fulfill existing translation needs, to extend multilingual communication to additional languages and services (e.g. to conquer untapped markets), and to reduce costs associated with commitments to linguistic diversity.”

—— QTLaunchPad

MQM, also known as Multidimensional Quality Metrics, is a framework that used in the QTLaunchPad software. But today we are not only focusing on what this software could do and what could be improved, the main point today is to show how and why we should use MQM in our translation process.

What is translation quality?

A qualified translation should include these three points:

- demonstrates required accuracy and fluency;

- for the audience and meet the purpose;

- complies with all other negotiated specifications;

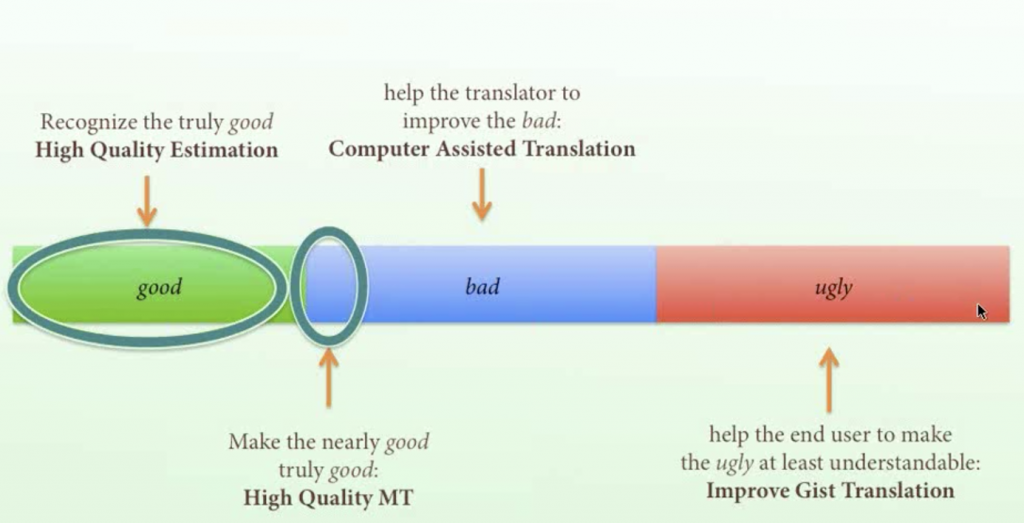

There is no doubt that every LSP is try to provide their clients with the best quality that they need. However, there is indeed sometimes that different people/ organizations have different bar about what is actually the “good”, “bad” or “ugly” translation, which means it is hard for us to evaluate the quality.

Why do we need to use multidimensional quality metrics?

In the new era of Machine Translation, a lot of incredible developers and linguists are making their effort to produce a high quality MT. But besides this, there’s some problems we need to take into account. Since there are different specifications and based on whether the work is done by human or the Machine, the problems could be divided by such:

Human metrics:

- not consistency: over 180 different issues checked, but there is little overlap;

- one-size-fits all approaches: it is not flexible to meet the different project needs;

- no comparison between projects;

- totally different from Machine Translation evaluation;

MT metrics:

In class we have a lot of BLEU, which is a metric that could indicate the translation result of the machine translation depending on different “feeds” from human. However, they might have the problem as below:

- they don’t indicate all kinds of problems. For example, the result might just obey the grammar, but it doesn’t make sense to human!;

- they are better suited to measure “bad” and “ugly” translation sectors: this is the same problem as the first one because when it comes to the context and human emotions, machine is not so good at doing it right now;

- the rankings are done by CS researchers and students that do not help translators at all: because translators don’t know how they can train the machine, if it’s only the people from another industry who helps us without us involved, there’s no point of doing that. It’s only training the machine.

MQM CORE

This is what MQM’s core is, there are three parts included: “Fluency”, “Accuracy” and “Verity”, there is also a “Design” in it, but in MQM core, they don’t use that. Also, after picking up an issue type is needed to be evaluated, there are 12 dimensions that we should take care of:

How to use MQM?

- defined your own metric based on dimensions;

- used a predefined metric;

- mark texts up or use a score card

summary

According to what we have mentioned above, if we want to evaluate the quality of the translation work, we need to first know about what specification we are talking about, because quality is relative to the specification. And since the quality is multidimensional, we need to know if it is “Fluency”, “Accuracy”, “Verity”, “Formatting” or “Engineering” issue.

Recent Comments